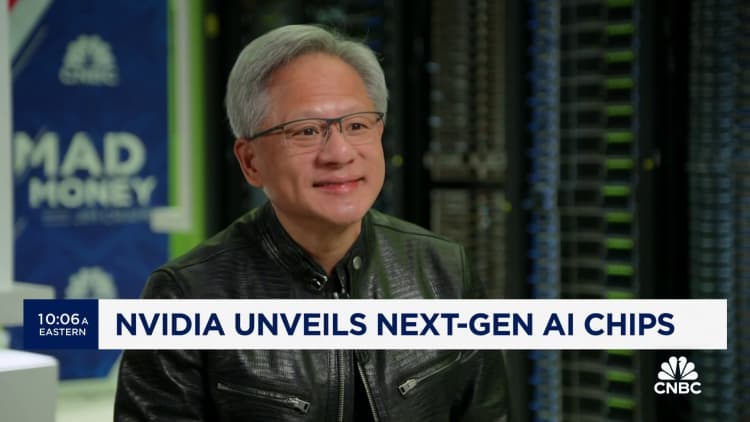

Nvidia’s next-generation graphics processor for artificial intelligence, called Blackwell, will cost between $30,000 and $40,000 per unit, CEO Jensen Huang told CNBC’s Jim Cramer.

“This will cost $30 to $40 thousand dollars,” Huang said, holding up the Blackwell chip.

“We had to invent some new technology to make it possible,” he continued, estimating that Nvidia spent about $10 billion in research and development costs.

The price suggests that the chip, which is likely to be in hot demand for training and deploying AI software like ChatGPT, will be priced in a similar range to its predecessor, the H100, or the “Hopper” generation, which cost between $25,000 and $40,000 per chip, according to analyst estimates. The Hopper generation, introduced in 2022, represented a significant price increase for Nvidia’s AI chips over the previous generation.

Nvidia CEO Jensen Huang compares the size of the new “Blackwell” chip with the current “Hopper” H100 chip at the company’s developer conference in San Jose, California.

Nvidia

Nvidia announces a new generation of AI chips about every two years. The latest, like Blackwell, are generally faster and more energy efficient, and Nvidia uses the publicity around a new generation to rake in orders for new GPUs. Blackwell combines two chips and is physically larger than the previous generation.

Nvidia’s AI chips have driven a tripling of the company’s quarterly sales since the AI boom kicked off in late 2022, when OpenAI’s ChatGPT was announced. Most of the top AI companies and developers have been using Nvidia’s H100 to train their AI models over the past year. For example, Meta said this year that it is buying hundreds of thousands of Nvidia H100 GPUs.

Nvidia doesn’t reveal the list price for its chips. They come in several different configurations, and the price an end customer like Meta or Microsoft might pay depends on factors such as the volume of chips purchased, or whether the customer buys the chips from Nvidia directly as part of a complete system or through a vendor like Dell, HP, or Supermicro that builds AI servers. Some servers are built with as many as eight AI GPUs.

On Monday, Nvidia announced at least three different versions of the Blackwell AI accelerator: a B100, a B200, and a GB200 that pairs two Blackwell GPUs with an Arm-based CPU. They have slightly different memory configurations and are expected to ship later this year.